Building Enduring Companies in the Age of AI

The End of an Era: SaaS Model Under Fire

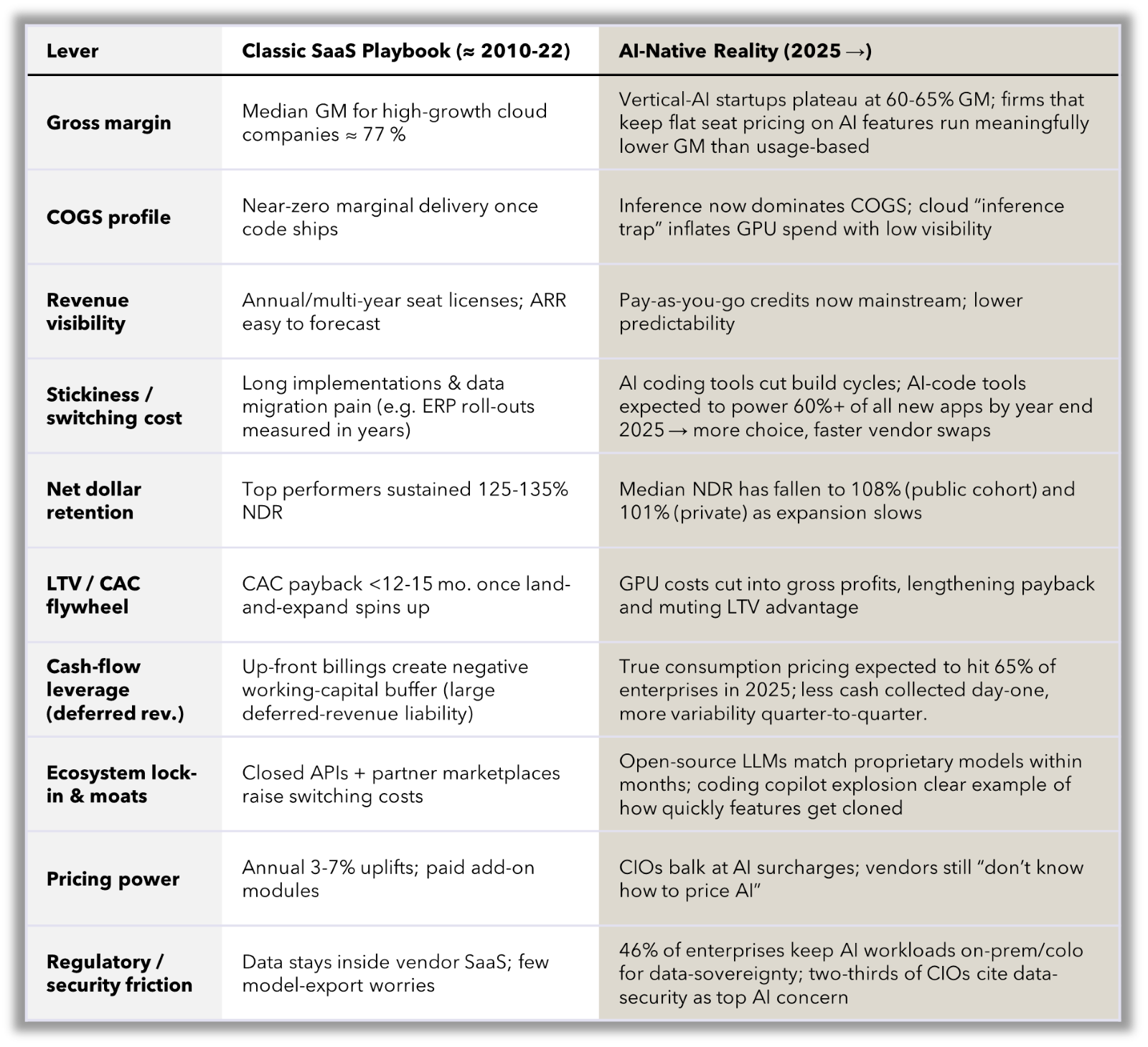

For nearly two decades, the SaaS playbook looked unbeatable. Variable costs approached zero once the code shipped, so gross margins routinely sat in the high-70s or better. Contractual, multi-year subscriptions gave investors and boards the revenue visibility that fuels premium valuation multiples. Lengthy implementations, painful data migrations, and change-management inertia locked customers in, while a growing catalogue of add-on modules and seat expansion pushed net dollar retention north of 120%. Up-front billings even produced negative working capital: cash landed in the bank months before the service was delivered.

Combine that with partner marketplaces and API ecosystems that deepened integration - and therefore exit friction - and SaaS companies could raise prices at almost every renewal cycle. The result was a rare alignment of high margins, sticky revenue, and favorable LTV/CAC, all wrapped in a simple ARR narrative investors understood.

AI has started to loosen every one of those bolts. Large-model inference introduces a real, variable COGS line: every query pings GPUs that someone has to pay for, forcing vendors toward usage- or outcome-based pricing schemes that rise and fall with customer activity. That variability guts the forecasting comfort once baked into ARR and weakens the “high-margin software” story. Meanwhile, low-code tooling, open-source LLMs, and API-ready foundation models compress feature half-lives - today’s breakout UI can be cloned by a fast-follower months or even weeks later.

Switching friction drops accordingly; customers can test, validate, and replace vendors in days, not quarters, eroding stickiness and pulling NDR back toward 100%. Worse, if AI features truly boost productivity, many customers will buy fewer seats over time, eliminating the seat-based expansion tailwind just as buyers insist that “AI should be table stakes, not a surcharge.” Layer on the compliance headache of routing sensitive data through external models, and even regulated industries that once paid handsomely for SaaS certainty are hesitating.
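The margin pressure from a variable inference bill can be made concrete with a small sketch. Every number here (seat price, fixed cost, per-query cost) is a hypothetical assumption chosen for round arithmetic, not a figure from any vendor or from the sources listed at the end of this piece.

```python
# Illustrative only: all figures below are hypothetical assumptions.

def gross_margin(revenue: float, fixed_cogs: float,
                 queries: int, cost_per_query: float) -> float:
    """Gross margin when COGS has a fixed part plus a per-query inference part."""
    cogs = fixed_cogs + queries * cost_per_query
    return (revenue - cogs) / revenue


SEAT_PRICE = 100.0      # assumed flat monthly price per seat (USD)
FIXED_COGS = 20.0       # assumed hosting and support cost per seat (USD)
COST_PER_QUERY = 0.01   # assumed inference cost per query (USD)

# Hold the seat price flat and let usage rise.
for monthly_queries in (0, 1_000, 3_000):
    margin = gross_margin(SEAT_PRICE, FIXED_COGS, monthly_queries, COST_PER_QUERY)
    print(f"{monthly_queries:>5} queries per seat -> gross margin {margin:.0%}")
```

Under these assumptions a flat seat price sees gross margin slide from 80% to 50% as usage climbs, which is the dynamic pushing vendors toward usage- or outcome-based pricing.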

Navigating the New Normal

The picture that emerges is clear: the classic, seat-based, ARR-centric SaaS model faces structural margin and retention pressure in an AI-native world.

In many ways, we’ve been here before: during the cloud transition of the 2000s, incumbents and new entrants alike grappled with a major technological shift that forced everyone to rethink their go-to-market, pricing, and delivery models. Many incumbents - Oracle, SAP, IBM - deftly navigated this shift and have since risen to new heights, while younger standouts like Amazon, Google, and Salesforce helped define the new normal. Along the way, dozens of companies that are now fixtures in the software firmament were born cloud-native, and the market is stronger for it.

We expect a similar pattern in the AI era. As AI becomes table-stakes functionality, both incumbents and start-ups will need to revisit their commercial and product strategies. Usage- and outcome-based pricing will gain prominence as vendors look to contain inference-driven COGS, and robust implementation approaches will be critical as enterprises work to unlock AI’s promise inside complex legacy stacks.

None of this necessarily spells the end of SaaS, but it clearly signals an evolution. As B2B fintech and software investors, we are spending a lot of time thinking about where enterprise value will accrue in a post-AI world. Two models continue to stand out: (a) businesses whose revenue scales automatically with economic volume, insulating margins from inference costs, and (b) solutions that embed so deeply in high-stakes workflows that dislodging them would require re-plumbing an entire organization. Firms that achieve either (or both) should be well positioned to thrive as the ground shifts under the traditional SaaS playbook.

Thesis Part 1: Owning Flow

Asset-Based Platforms with Scalable Fees

If deeply integrated AI systems (the subject of Part 2) win by owning depth, the complementary play wins by owning the flow: platforms that clip a fractional fee every time money moves, assets grow, or credit is extended.

1. Revenue that rides the economic tide.

Whether it is advisory fees on assets under management, interchange on payments, or take-rates on loan origination, these businesses grow automatically with transaction volume and price inflation. BCG’s 2025 global fintech report shows 60% of all fintech revenue (~$231B) already comes from these toll roads, compounding 21% YoY - more than double traditional bank fee growth.

2. SaaS-like margins, minus the inference drag.

Once the core rails are in place, servicing an incremental dollar of AUM or payment volume costs pennies. AI supercharges the model by automating fraud review, reconciliation, and compliance - turning humans into exception handlers and pushing gross margins toward the high-70s without the variable inference bill that hammers AI-heavy SaaS.

3. Built-in NDR through market appreciation.

A traditional SaaS vendor depends on seat expansion; an asset platform’s revenue grows whenever customer wealth rises or transaction velocity accelerates. Capgemini’s World Wealth Report 2025 notes global HNWI assets rose 6% last year alone. Combine that with even modest 3–4% GDP growth in an AI-boosted economy and fee income compounds dramatically - even before new customers arrive. If AI productivity gains drive GDP growth to the 7%+ range that Ark Invest projects, the NDR profile of these companies could become far superior to even top SaaS companies today.
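A rough way to see the built-in retention effect: if fees are charged as a fixed rate on assets, revenue from an unchanged customer base grows at the same rate as the assets themselves. The sketch below assumes a hypothetical platform with $1B of assets, a 50 basis point fee, and no new customers, withdrawals, or fee changes; the 6% growth rate simply reuses the HNWI figure quoted above as an illustrative input.

```python
# Illustrative only: the starting assets and fee rate are hypothetical assumptions.

def fee_revenue_path(aum: float, fee_bps: float,
                     annual_growth: float, years: int) -> list[float]:
    """Annual fee revenue for a fixed customer base whose assets appreciate.

    Assumes no new customers, no net inflows or outflows, and a constant fee.
    """
    path = []
    for _ in range(years + 1):
        path.append(aum * fee_bps / 10_000)   # fee in basis points of assets
        aum *= 1 + annual_growth              # assets appreciate for next year
    return path


path = fee_revenue_path(aum=1_000_000_000, fee_bps=50, annual_growth=0.06, years=5)
implied_ndr = path[1] / path[0]   # year-over-year revenue from the same customers

print(f"Year 0 fee revenue: ${path[0]:,.0f}")
print(f"Year 5 fee revenue: ${path[-1]:,.0f}")
print(f"Implied NDR from appreciation alone: {implied_ndr:.0%}")
```

Under these simplifying assumptions the implied NDR is just one plus the asset growth rate, so a higher growth input raises retention without any seat expansion or upsell.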

4. Two secular tailwinds create a decade-long land-grab.

- The Great Wealth Transfer. Roughly $90T in U.S. household assets will shift from Boomers to Millennials/Gen Z. Custodians and RIAs positioned as destination accounts for that capital inherit multi-decade client lifetimes.

- Everything-as-a-transaction. Embedded-finance forecasts project $7.2T in global volume by 2030 as payments and lending rails disappear into software. Each embedded node is a new tollbooth.

5. Defensibility lives in licenses, balance sheets, and integrations.

Processing money is not a pure-software game; it requires regulatory approvals, capital, risk models, and deep hooks into client systems. These frictions slow fast followers in the same way that forward-deployed engineers (see Part 2) slow them on the workflow side. Investors should favor platforms that:

- Hold hard-to-obtain charters or issuer licenses

- Sit at multiple points in the flow (custody and payments; brokerage and advisory)

- Own proprietary risk or transaction data that sharpens underwriting and dynamic pricing

6. Risk and underwriting considerations.

Volume is cyclical: recession-driven asset drawdowns or spending slowdowns hurt the top line immediately. The hedge is diversification - across asset classes, geographies, and fee types - and a cost base that flexes down when throughput falls (AI-enabled ops make this easier).

Bottom line: Asset-based platforms recreate everything investors loved about classic SaaS - high margins, negative working capital, sticky relationships - without the AI inference tax and with upside leverage to both inflation and economic expansion. In a portfolio paired with workflow-depth winners, they provide uncorrelated, macro-linked compounding that can outlast the hype cycles of any single technology wave.

Thesis Part 2: Owning Depth

Deeply Integrated, Intelligent Systems

The second investable archetype wins by owning the entire workflow, not by selling discrete “AI features.” These companies look less like traditional product vendors and more like modern, software-powered consultancies. Forward-deployed engineering teams embed with a handful of category leaders, rebuild mission-critical workflows around domain-tuned intelligent processes, and only then productize what they have learned.

1. Start at the top, not the middle.

Mid-market logos may be tempting proof-points, but they seldom yield the data rights, integration access, or budget scope needed to build an enduring moat. Instead, the winning pattern is to secure a handful of enterprise anchors capable of paying seven- or eight-figure ACVs at scale. The payoff is outsized: large enterprises possess the richest proprietary datasets and the most painful legacy entropy, so even a single deployment can lay the foundation for a differentiated company.

2. Forward-deployed engineers as the wedge.

Borrowing from Palantir’s “field ops” model, elite engineers sit on-site (or virtual-desk-adjacent) with client subject-matter experts. In the beginning, this may just be the founders as they work to build a deep understanding of their clients’ needs. Their remit is broader than shipping code; they work with real users, deftly handle bureaucratic obstacles, and improve processes in real time. This consultative intimacy does three things:

- Surfaces edge-case data that traditional multi-tenant SaaS vendors never see

- Collapses the customer’s change-management pain, the single biggest blocker to AI adoption and ROI

- Creates a human relationship moat - competing vendors cannot simply “API in” and displace the incumbent

3. Productization through repetition.

Once the first enterprise is live, the playbook turns experience into code: reusable integration adapters, fine-tuned models, and governance templates. Each new customer onboards faster, allowing the company to gradually reduce the services ratio while preserving high-gross-margin software revenue. Bessemer’s 2024 Vertical-AI cohort shows exactly this curve - 400% YoY growth while keeping ~65% GM.

4. The triple-capture revenue engine.

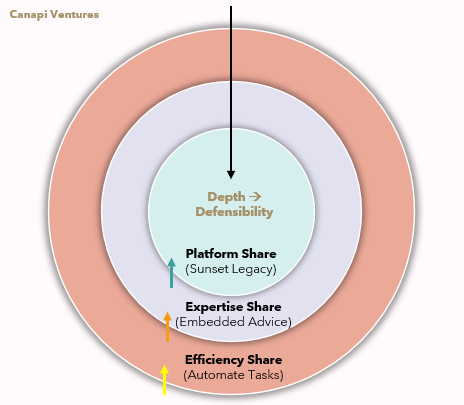

Intelligent systems vendors embed so deeply in a customer’s workflow that they unlock three ever-richer budgets as adoption matures:

- Efficiency Share: replace manual labor and swivel-chair operations; charge per automated task or a percent of cost savings

- Expertise Share: replace $1,000-per-hour slide-ware with living software and empathetic advice; charge a fraction of what incumbent consulting firms do and operate at higher margin

- Platform Share: as the abstraction layer matures, replace legacy software systems entirely; over time, this can capture a meaningful portion of the customer’s entire OpEx

The sequence matters: automation proves ROI, embedded expertise earns trust, and trust grants permission to sunset incumbents. Once all three layers accrue, revenue scales faster than headcount and churn risk collapses.

Palantir’s commercial book - on pace to cross $1B, up >70% YoY - illustrates how quickly the capture stack compounds once the abstraction layer becomes indispensable. ServiceNow’s 2025 AI Maturity Index reports a 3× revenue delta between “AI pacesetters” (who take this full-stack approach) and laggards.

5. Risk and underwriting considerations.

Sales cycles are long, deployments are bespoke, and delivery failure is binary. Much of the early operational work will not scale, and gross margins may stay low for much longer than in traditional SaaS, potentially compressing valuations or making fundraising challenging. These pressures can be mitigated by:

- Remaining as lean as possible through the first enterprise deployment: a nimble team of cofounders and one or two engineers should be sufficient to validate the approach

- Requiring outcome-linked pricing to preserve upside

- Tracking margin progression: the services → software mix should improve with each cohort

- Valuing proprietary data rights as a primary asset; they are the flywheel that lets later implementations move from consultative to near-turn-key
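The margin-progression check in the list above comes down to a weighted average: blended gross margin is the services share of revenue times the services margin, plus the software share times the software margin. The sketch below uses purely hypothetical margins and cohort mixes to show what an improving trend looks like; none of these numbers come from a real company.

```python
# Illustrative only: margins and cohort mixes below are hypothetical assumptions.

def blended_gross_margin(services_share: float,
                         services_gm: float,
                         software_gm: float) -> float:
    """Revenue-weighted gross margin for a services-plus-software business."""
    if not 0.0 <= services_share <= 1.0:
        raise ValueError("services_share must be between 0 and 1")
    return services_share * services_gm + (1.0 - services_share) * software_gm


SERVICES_GM = 0.30   # assumed margin on forward-deployed services revenue
SOFTWARE_GM = 0.80   # assumed margin on productized software revenue

# Assumed services share of revenue for three successive customer cohorts.
cohort_services_share = {"cohort 1": 0.60, "cohort 2": 0.40, "cohort 3": 0.20}

for cohort, share in cohort_services_share.items():
    gm = blended_gross_margin(share, SERVICES_GM, SOFTWARE_GM)
    print(f"{cohort}: services share {share:.0%} -> blended gross margin {gm:.0%}")
```

With these inputs the blended margin steps from 50% to 60% to 70% as services fall from 60% to 20% of revenue. A cohort series that fails to move in this direction would suggest the learnings are not being productized.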

Bottom line: In an era where front-end features commoditize at the speed of model-provider announcement cycles, depth - not breadth - creates moats. Companies that embed forward-deployed engineers with top-quartile enterprises, codify those learnings into reusable systems, and expand via triple-capture can graduate from “smart consultants” to multi-billion-dollar workflow owners.

What We Believe and How We’re Investing

AI is overturning some of the economic assumptions that powered the first two decades of SaaS, yet the model itself is far from obsolete. History shows that incumbents who refresh their product, pricing, and go-to-market playbooks can emerge stronger after platform shifts - and we expect the same this time around.

Still, the AI wave will not lift all boats. Seat-based vendors that ignore variable inference costs or cling to rigid pricing will feel margin pressure. Marginal products that rely on vendor lock-in and pricing power to drive NDR will be replaced by better products at a fraction of the cost. But for the right teams - with the right architecture, GTM motion, and customer empathy - the upside may be unprecedented.

We believe these two thesis areas - scalable asset platforms and deeply integrated intelligent systems - represent the most compelling near- and mid-term opportunities for investors and builders focused on financial services and enterprise software.

We’re actively seeking to meet founders who share this orientation: those building infrastructure that scales with money flows, or products that embed deeply enough to become indispensable. If you’re building in one of these spaces or want to compare notes, please reach out!

-----

Sources and Additional Reading:

- Bessemer Venture Partners – Part I: The Future of AI Is Vertical (September 2024)

- LinkedIn / Sammy Abdullah – Q1 2025 Net Dollar Retention Trends Across Public SaaS Companies (June 2025)

- Boston Consulting Group – Fintech’s Next Chapter: Scaled Winners and Emerging Disruptors (PDF) (May 2025)

- Forrester – Predictions 2025: A Year of Reckoning for Enterprise Application Vendors (October 2024)

- Monetizely – AI Pricing: How Much Does AI Cost in 2025? (May 2025)

- Theory Ventures / Tomasz Tunguz – How Much Does It Cost to Use an LLM? (November 2023)

- a16z Fintech Newsletter – CFO Roundtable: AI Growth, Pricing & Forecasting (June 2025)

- Klover.ai – Microsoft’s AI Strategy: Analysis of Dominance and Risk in the Agentic Economy (July 2025)

- Palantir Blog – Inside Look: The Baseline Team & Forward-Deployed Infrastructure Engineering (December 2024)

- Barry McCardel – Understanding “Forward-Deployed Engineering” and Why Your Company Probably Shouldn’t Do It (November 2024)

- Palantir Investor Relations – Q1 2025 Investor Presentation (PDF) (May 2025)

- Bain Capital Ventures – Gross Margin Is a BS Metric (August 2024)

- Bessemer Venture Partners – State of the Cloud 2024 (June 2024)

- ServiceNow – Enterprise AI Maturity Index 2025 (July 2025)

- Capgemini – World Wealth Report 2025 (June 2025)

- Mambu / AWS – The Next Digital Revolution (August 2023)

- Ark Invest – Technology Could Accelerate GDP Growth to 7% Per Year (March 2024)

- a16z – The New Business of AI and How It’s Different From Traditional Software (February 2020)

- a16z – The Case for Services in Enterprise Software Startups (March 2018)

- Zuora & BCG – How Consumption Models Contribute to Business Success (2023)

- F Prime – RIP Old VC Playbook: How Investors Are Changing AI Startups Evaluation (June 2025)

- LEK – How AI is Redefining SaaS Metrics and Forecasting (July 2025)

- Dave Kellogg – The Impact of AI on SaaS Metrics (October 2024)

- Wall Street Journal – No One Knows How to Price AI Tools (February 2025)

- CIO – 2025 AI Infrastructure: What the Data is Telling Leaders Now (June 2025)

- Ed Zitron – The Hater’s Guide to the AI Bubble (July 2025)